s3cmd is a command-line utility for creating S3 buckets and for uploading, retrieving, and managing data in Amazon S3 storage. Let’s see how to install s3cmd and manage S3 buckets from the command line in easy steps.

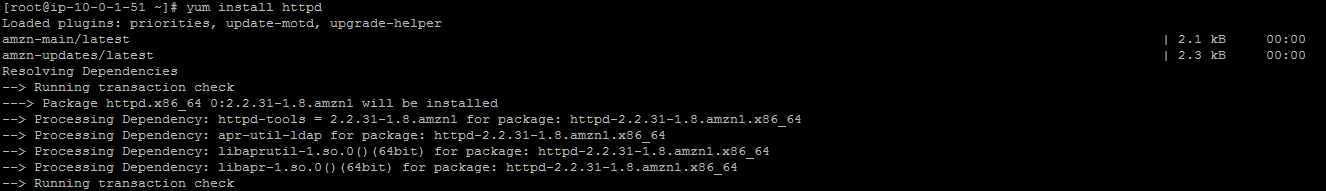

Follow the steps below to install the s3cmd tool:

- wget http://sourceforge.net/projects/s3tools/files/s3cmd/1.5.0-alpha1/s3cmd-1.5.0-alpha1.tar.gz

- tar -xvzf s3cmd-1.5.0-alpha1.tar.gz

- cd s3cmd-1.5.0-alpha1

- python setup.py install

- yum install python-magic

- cd

- s3cmd --configure
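After the last step it can be useful to confirm that the tool is on the PATH and that a configuration file was written. The sketch below is only an illustration: `check_setup` is a hypothetical helper name, and it assumes that `s3cmd --configure` saved its settings to the default location, `~/.s3cfg`.

```shell
# Report whether a command is on PATH and whether its config file exists.
# Both arguments are optional and default to the values used in this post.
# Returns 0 when everything is in place, 1 if the tool is missing,
# 2 if the config file is missing.
check_setup() {
    tool="${1:-s3cmd}"
    config="${2:-$HOME/.s3cfg}"
    if ! command -v "$tool" >/dev/null 2>&1; then
        echo "missing: $tool is not on PATH"
        return 1
    fi
    if [ ! -f "$config" ]; then
        echo "unconfigured: $config not found, run '$tool --configure'"
        return 2
    fi
    echo "ok: $tool is installed and $config exists"
}
```

Calling `check_setup` with no arguments checks s3cmd itself; passing another command name and path makes it easy to try the helper on a machine where s3cmd is not installed yet.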

Commands:

1) List all the buckets in S3

s3cmd ls

2) Create the bucket

[root@localhost ~]# s3cmd mb s3://priyaTest

Bucket 's3://priyaTest/' created

3) List the contents of a bucket

[root@localhost ~]# s3cmd ls s3://priyaTest/

2014-01-24 13:22 35 s3://priyaTest/S3put.txt

4) Upload a single file to S3

[root@localhost ~]# s3cmd put S3put.txt s3://priyaTest/

S3put.txt -> s3://priyaTest/S3put.txt [1 of 1]

35 of 35 100% in 0s 45.55 B/s done

5) Get the uploaded file from S3 and verify that it hasn't been corrupted.

[root@localhost ~]# s3cmd get s3://priyaTest/S3put.txt S3put_1.txt

s3://priyaTest/S3put.txt -> S3put_1.txt [1 of 1]

35 of 35 100% in 2s 13.68 B/s done

Now there are two files:

[root@localhost ~]# ls -l | grep S3put

-rw-r--r--. 1 root root 35 Jan 28 20:42 S3put_1.txt

-rw-r--r--. 1 root root 35 Jan 24 05:20 S3put.txt

Check for file corruption:

[root@localhost ~]# md5sum S3put.txt S3put_1.txt

d15116439e554bfebe1355f969aa51d0 S3put.txt

d15116439e554bfebe1355f969aa51d0 S3put_1.txt

If the checksum of the original file matches that of the retrieved file, the verification has succeeded (i.e. the file is not corrupt).
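The manual comparison above can be wrapped in a small function so that a script can act on the result. This is a minimal sketch, assuming GNU `md5sum` is available as in the transcript; `same_checksum` is a hypothetical helper name, not part of s3cmd.

```shell
# Compare the MD5 checksums of two files.
# Prints "match" and returns 0 when they are equal,
# prints "MISMATCH" and returns 1 otherwise.
same_checksum() {
    sum_a=$(md5sum "$1" | cut -d ' ' -f 1)
    sum_b=$(md5sum "$2" | cut -d ' ' -f 1)
    if [ "$sum_a" = "$sum_b" ]; then
        echo "match: $sum_a"
    else
        echo "MISMATCH: $1=$sum_a $2=$sum_b"
        return 1
    fi
}
```

For the files in this post the call would be `same_checksum S3put.txt S3put_1.txt`.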

6) Delete objects and bucket.

A bucket cannot be deleted while it still contains objects. Before deleting the bucket, remove the objects that are present in it.

[root@localhost ~]# s3cmd del s3://priyaTest/S3put.txt

File s3://priyaTest/S3put.txt deleted

[root@localhost ~]# s3cmd ls s3://priyaTest/

[root@localhost ~]#

[root@localhost ~]# s3cmd rb s3://priyaTest/

Bucket 's3://priyaTest/' removed

[root@localhost ~]#
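The two steps above (empty the bucket, then remove it) can be combined in one function. Treat this as a sketch under assumptions: it assumes your s3cmd version accepts `--recursive` and `--force` with `del`, and both the `remove_bucket` name and the `S3CMD` variable are introduced here for illustration only. Setting `S3CMD="echo s3cmd"` prints the commands instead of running them, which is a safe way to check what would happen.

```shell
# Command used to talk to S3. Set S3CMD="echo s3cmd" to print the
# commands instead of executing them.
S3CMD="${S3CMD:-s3cmd}"

# Delete every object in a bucket, then remove the bucket itself.
# Usage: remove_bucket s3://bucket-name
remove_bucket() {
    bucket="${1%/}"    # strip one trailing slash, if present
    case "$bucket" in
        s3://?*) ;;    # looks like a bucket URI, carry on
        *) echo "usage: remove_bucket s3://bucket-name" >&2; return 2 ;;
    esac
    # $S3CMD is intentionally unquoted so that "echo s3cmd" splits into words.
    $S3CMD del --recursive --force "$bucket/" &&
    $S3CMD rb "$bucket/"
}
```

Because the bucket is only removed when the delete step succeeds, a failed cleanup leaves the bucket in place.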

7) Upload multiple files to S3

[root@localhost home]# s3cmd put -r Priya s3://priyaTest/

Priya/p4 -> s3://priyaTest/Priya/p4 [1 of 3]

11 of 11 100% in 3s 3.52 B/s done

Priya/priya -> s3://priyaTest/Priya/priya [2 of 3]

3 of 3 100% in 1s 2.84 B/s done

Priya/sync.txt -> s3://priyaTest/Priya/sync.txt [3 of 3]

35 of 35 100% in 0s 49.57 B/s done

OR

[root@localhost home]# s3cmd put --recursive Priya s3://priyaTest/

Priya/p4 -> s3://priyaTest/Priya/p4 [1 of 3]

11 of 11 100% in 2s 4.07 B/s done

Priya/priya -> s3://priyaTest/Priya/priya [2 of 3]

3 of 3 100% in 0s 4.14 B/s done

Priya/sync.txt -> s3://priyaTest/Priya/sync.txt [3 of 3]

35 of 35 100% in 0s 48.77 B/s done

[root@localhost home]#

8) s3cmd sync

The s3cmd sync command uploads only the files that do not already exist at the destination in the same version. It first reads the details of the files already present at the destination and compares them with the local files. By default it compares both the file size and the MD5 checksum, and then uploads only the files that differ.
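The comparison described above can be illustrated locally. The sketch below is not s3cmd's implementation: it uses a second local file as a stand-in for the copy at the destination, and `needs_upload` is a hypothetical helper name. It checks the size first because that is cheap, and only computes the MD5 checksum when the sizes agree.

```shell
# Decide whether a local file needs uploading, given a local stand-in
# for the copy that is already at the destination.
# Returns 0 (and prints the reason) when an upload is needed, 1 otherwise.
needs_upload() {
    src="$1"
    dest_copy="$2"
    if [ ! -f "$dest_copy" ]; then
        echo "upload (new file)"
        return 0
    fi
    # Arithmetic expansion strips any padding that wc adds on some systems.
    size_src=$(($(wc -c < "$src")))
    size_dest=$(($(wc -c < "$dest_copy")))
    if [ "$size_src" -ne "$size_dest" ]; then
        echo "upload (size differs)"
        return 0
    fi
    md5_src=$(md5sum < "$src")
    md5_dest=$(md5sum < "$dest_copy")
    if [ "$md5_src" != "$md5_dest" ]; then
        echo "upload (checksum differs)"
        return 0
    fi
    echo "skip (unchanged)"
    return 1
}
```

A file with the same size but different content is still caught by the checksum step, which is why both checks are useful together.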

To test s3cmd sync, create a new file:

[root@localhost Priya]# pwd

/home/Priya

[root@localhost Priya]# touch newtest.txt

[root@localhost Priya]# ll

total 12

-rw-r--r--. 1 root root 0 Feb 4 20:58 newtest.txt

-rw-r--r--. 1 root root 11 Jan 30 21:08 p4

-rw-r--r--. 1 root root 3 Jan 30 21:07 priya

-rw-r--r--. 1 root root 35 Jan 30 21:27 sync.txt

[root@localhost Priya]#

Then run s3cmd sync:

[root@localhost Priya]# s3cmd sync /home/Priya/ s3://priyaTest/

/home/Priya/newtest.txt -> s3://priyaTest/newtest.txt [1 of 1]

0 of 0 0% in 0s 0.00 B/s done

[root@localhost Priya]#

Dry run of s3cmd sync:

To find out which files would be uploaded to S3 without actually transferring anything, use s3cmd sync --dry-run:

[root@localhost Priya]# vim newtest.txt

[root@localhost Priya]# s3cmd sync --dry-run /home/Priya/ s3://priyaTest/

upload: /home/Priya/newtest.txt -> s3://priyaTest/newtest.txt

WARNING: Exiting now because of --dry-run

Adding --delete-removed to the dry run lists the files that exist remotely but are no longer present locally (or perhaps just have different names here). Without --dry-run, sync would delete them:

[root@localhost Priya]# s3cmd sync --dry-run --delete-removed /home/Priya/ s3://priyaTest/

delete: s3://priyaTest/dsfsd/

delete: s3://priyaTest/newtest.txt

WARNING: Exitting now because of --dry-run

[root@localhost Priya]#
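The idea behind --delete-removed can be tried out with two local directories before touching a real bucket. This is a rough local analogy rather than how s3cmd works internally: the second directory stands in for the bucket, and `removed_files` is a hypothetical helper name.

```shell
# List files that exist under the destination directory but not under
# the source directory, in the style of the "delete:" lines above.
# Usage: removed_files SOURCE_DIR DEST_DIR
removed_files() {
    src_dir="$1"
    dest_dir="$2"
    src_list=$(mktemp)
    dest_list=$(mktemp)
    # Relative paths, sorted, so that comm can compare the two lists.
    (cd "$src_dir" && find . -type f | sort) > "$src_list"
    (cd "$dest_dir" && find . -type f | sort) > "$dest_list"
    # comm -13 keeps only the lines that are unique to the second list.
    comm -13 "$src_list" "$dest_list" | sed 's|^\./|delete: |'
    rm -f "$src_list" "$dest_list"
}
```

Nothing is deleted by this function; like --dry-run, it only reports.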

Download from S3

To download from S3, use the get command.

[root@localhost home]# s3cmd get -r s3://priyaTest/ /home/

s3://priyaTest/newtest.txt -> /home/newtest.txt [1 of 4]

0 of 0 0% in 0s 0.00 B/s done

s3://priyaTest/p4 -> /home/p4 [2 of 4]

11 of 11 100% in 0s 25.01 B/s done

s3://priyaTest/priya -> /home/priya [3 of 4]

3 of 3 100% in 0s 6.73 B/s done

s3://priyaTest/sync.txt -> /home/sync.txt [4 of 4]

35 of 35 100% in 0s 75.17 B/s done

[root@localhost home]# ll

total 12

-rw-r--r--. 1 root root 0 Feb 6 04:16 newtest.txt

-rw-r--r--. 1 root root 11 Feb 6 04:16 p4

-rw-r--r--. 1 root root 3 Feb 6 04:16 priya

-rw-r--r--. 1 root root 35 Feb 6 04:16 sync.txt

[root@localhost home]#

Use the --exclude option to skip files that match a pattern during download. Quote the pattern so that the shell does not expand it before s3cmd sees it.

[root@localhost home]# s3cmd get --force --recursive s3://priyaTest/ --exclude 'p*' /home/

s3://priyaTest/newtest.txt -> /home/newtest.txt [1 of 2]

0 of 0 0% in 0s 0.00 B/s done

s3://priyaTest/sync.txt -> /home/sync.txt [2 of 2]

35 of 35 100% in 0s 77.68 B/s done

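The --include option can be combined with --exclude to bring back files that an exclude pattern would otherwise skip:

[root@localhost home]# s3cmd sync --dry-run --exclude '*.txt' --include 'dir2/*' . s3://s3tools-demo/demo/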

exclude: dir1/file1-1.txt

exclude: dir1/file1-2.txt

exclude: file0-2.txt

upload: ./dir2/file2-1.log -> s3://s3tools-demo/demo/dir2/file2-1.log

upload: ./dir2/file2-2.txt -> s3://s3tools-demo/demo/dir2/file2-2.txt

upload: ./file0-1.msg -> s3://s3tools-demo/demo/file0-1.msg

upload: ./file0-3.log -> s3://s3tools-demo/demo/file0-3.log

WARNING: Exiting now because of --dry-run

The dir2/file2-2.txt line shows a file that has a .txt extension, i.e. it matches an exclude pattern, but because it also matches the 'dir2/*' include pattern, it is still scheduled for upload.
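The include-over-exclude rule described above can be mimicked with shell glob matching. This is a simplified sketch with one pattern of each kind, not s3cmd's own matching code, and `classify` is a hypothetical helper name. As in the output above, `*` in a `case` pattern also matches `/`, so '*.txt' catches files in subdirectories.

```shell
# Decide what happens to a path given one exclude and one include pattern.
# A path that matches the include pattern is uploaded even if it also
# matches the exclude pattern.
# Usage: classify PATH EXCLUDE_PATTERN INCLUDE_PATTERN
classify() {
    path="$1"
    exclude="$2"
    include="$3"
    # The unquoted variable is treated as a glob pattern by case.
    case "$path" in
        $include) echo "upload: $path"; return 0 ;;
    esac
    case "$path" in
        $exclude) echo "exclude: $path"; return 0 ;;
    esac
    echo "upload: $path"
}
```

For example, `classify dir2/file2-2.txt '*.txt' 'dir2/*'` reports the file as an upload, while `classify dir1/file1-1.txt '*.txt' 'dir2/*'` reports it as excluded.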

Do let me know if you found this blog helpful. You can share your thoughts in the comments below.