The majority of applications today are designed and built to run over the internet, in different browsers and across multiple devices. This creates many more reasons and opportunities to conduct performance testing.

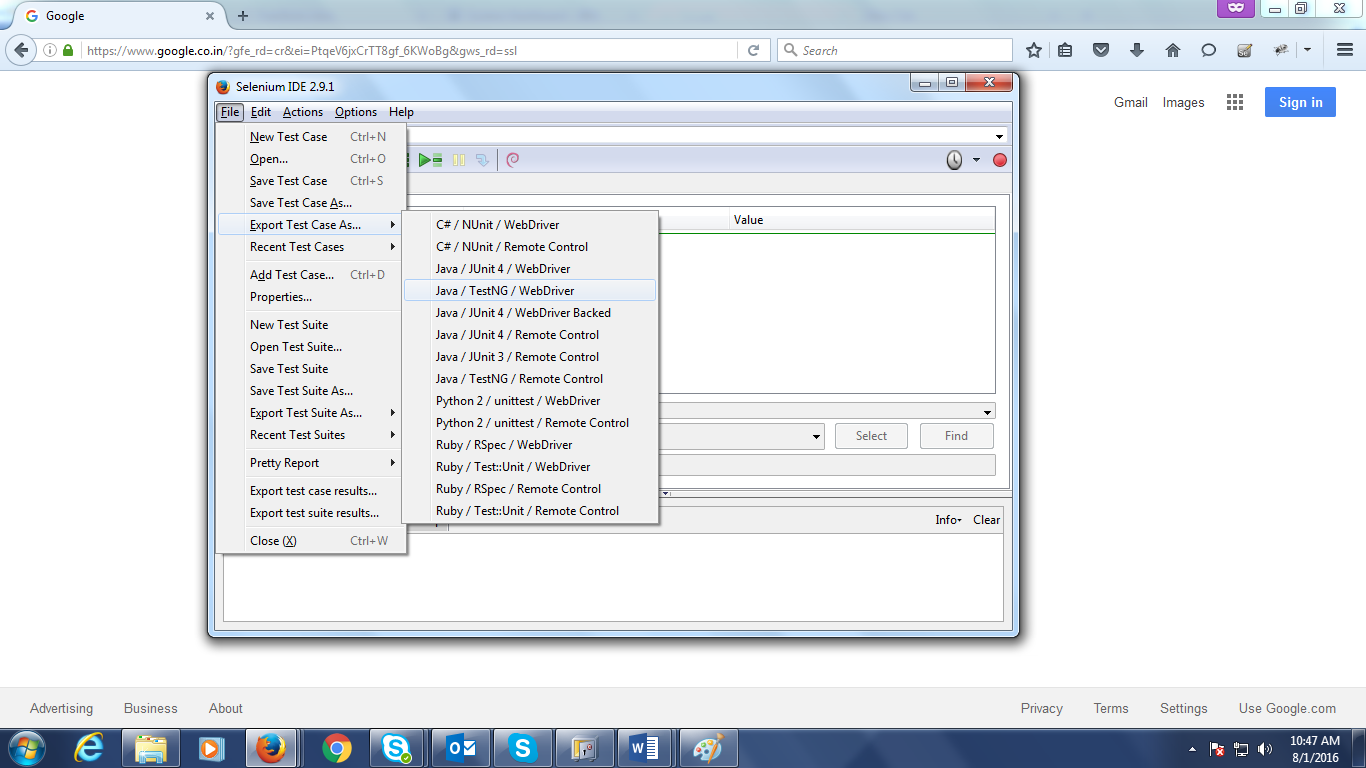

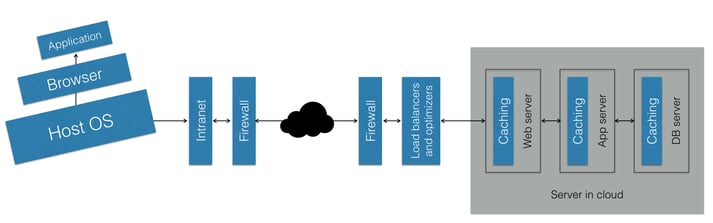

The figure shows the path of a typical user input and the application's response in a cloud-hosted web application. The responsiveness of the application is affected all along the line, in both directions.

Every external or internal interface point offers an opportunity for measurement, improvement and fine tuning.

It is important that performance tests be carried out in three environments: (a) the agreed optimal environment (most responsive), (b) the actual user environment (expected delivery performance in the field) and (c) the deprived environment (worst performance). In today's world of continuous development and deployment, performance tests are often, for lack of time, run repeatedly in a single environment built to match only one of these three. Network latency and responsiveness are dynamic and change from time to time. A good performance test execution profile will therefore not only cover all three environments but also run the tests at different times of the day. By exercising the optimal, average and poor environments at different times, the tester accounts for the borderline response conditions in each environment. For example, server CPU performance may degrade significantly when the load threshold is crossed, and the impact may differ across the three environments.
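One way to make such an execution profile explicit is to enumerate every combination of environment and time of day as a planned run. The following is a minimal sketch only: the environment labels mirror the three environments named above, while the time slots and the function name `build_execution_profile` are illustrative assumptions, not part of any particular tool.

```python
from itertools import product

# The three environments come from the text; the time slots are
# hypothetical examples and should be replaced with real traffic windows.
ENVIRONMENTS = ["optimal", "actual_user", "deprived"]
TIME_SLOTS = ["02:00", "10:00", "14:00", "20:00"]


def build_execution_profile(environments, time_slots):
    """Return one planned test run per (environment, time slot) pair."""
    return [
        {"environment": env, "start_time": slot}
        for env, slot in product(environments, time_slots)
    ]


profile = build_execution_profile(ENVIRONMENTS, TIME_SLOTS)
for run in profile:
    print(f"{run['start_time']}  {run['environment']}")
```

With three environments and four time slots this yields twelve planned runs, which makes it easy to see when a cycle has covered only one environment or one time of day.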

After all, the idea of testing is to ensure that the product delivers the expected performance in real-life, uncontrolled conditions. It is therefore crucial to get as close to real-life usage conditions as possible when testing.

It is important to be mindful of the deployment environments of the majority of users. Their environment may NOT fall midway between the optimal and the worst environments. In fact, in most cases you may find that over 70% (or as many as 90%) of users have an environment poorer than the midway environment.

The practice of reporting 90th percentile numbers as performance testing results has emerged from this realization. A 90th percentile baseline helps ensure that the expected performance is delivered to actual users even with depleted configurations.

A percentile indicates what percentage of the dataset is at or below the selected number. For example, in a merit list, the first rank holder is at the 100th percentile because 100 percent of the list is at or below that level. Similarly, the 90th percentile response time is the response time such that 90 percent of the users have the same or a better (shorter) response time. To get this number, sort the response times in descending order and locate the number 10% down from the top of the list. (Microsoft Excel also has a ready-made function for percentiles, by the way.)
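The sort-and-locate procedure can be sketched in a few lines. This is a minimal illustration using the nearest-rank method; the function name `percentile_response_time` and the sample timings are hypothetical. Spreadsheet and library functions that interpolate between neighbouring samples may return slightly different values.

```python
import math


def percentile_response_time(response_times, percentile=90):
    """Nearest-rank percentile: the smallest sample with at least
    `percentile` percent of all samples at or below it."""
    if not response_times:
        raise ValueError("need at least one response time")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in (0, 100]")
    ordered = sorted(response_times, reverse=True)  # slowest first
    n = len(ordered)
    # 1-based rank counted from the fastest sample ...
    rank_from_fastest = math.ceil(percentile * n / 100)
    # ... converted to an index into the slowest-first list.
    return ordered[n - rank_from_fastest]


# Hypothetical response times in milliseconds.
times_ms = [120, 95, 310, 150, 101, 99, 870, 130, 110, 105]
p90 = percentile_response_time(times_ms, 90)
print(f"90th percentile: {p90} ms")
```

With ten samples, the 90th percentile is the second entry of the slowest-first list: one sample (10%) is slower and nine samples (90%) are equal or faster.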

The following may be considered mandatory hygiene practices in performance testing:

- The testing setup should mimic the actual user settings as closely as possible. This includes at least the network type, bandwidth, connections, hardware resources and processing power. There is little point in testing an application over, say, a peer-to-peer connection, an intranet or from within the cloud when the user is expected to use a 3G connection. The results of good performance testing will hold up in real-life situations.

- The tests should be mindful of the caching layers at every stage. To improve performance, modern software relies very heavily on caching at every possible level. For example, modern databases implement a caching layer and will answer the second and subsequent identical queries from cached data until the cache becomes a “dirty cache”, which happens when an edit, an append or a delete is performed on the database. In other words, a commit must be performed to change the cache control checksum, which marks the cache as “dirty” and forces a re-read from the actual database. Likewise, an application server adds a caching layer, and so do many applications!

- Every performance testing cycle should be conducted on a pristine (vanilla) test environment to avoid any unknown performance advantage from caching.

- When databases are involved, at least 20% of the expected user load should submit identical data concurrently.

- Conduct:

  - Concurrent user testing (accessing the server AT THE SAME MOMENT)

  - Simultaneous user testing (accessing the server around the same timeframe, but not necessarily at the same moment)

  - Soak loading (exposing the server to a sustained 80% load for prolonged periods of time - typically about 5 times the expected peak load duration)

  - Spike loading (reaching the peak load in a very short period of time - typically a few seconds)

  - Fatigue testing (repeating the spike load cycles multiple times in reasonably quick succession). This is one of the most important types of testing for unearthing memory leaks, background processes or threads that are slow to terminate, and undesired side effects of cached data or processes

  - Render testing (testing the browser for UI delivery performance). It is not very commonly carried out, but it is important for applications with complex rendering processes or algorithms

  - Workload modeling: load the server with all the types of workload expected in the application workflow during simultaneous user testing. This is yet another way to simulate real-world scenarios as closely as possible
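The soak, spike and fatigue patterns described in the list differ mainly in the shape of the load over time. The sketch below expresses each as a list of target user counts, one entry per second. The function names, the default ramp, hold and rest durations, and the choice of one-second steps are all assumptions made for illustration; a real load tool will have its own way of describing these shapes.

```python
def soak_profile(peak_users, peak_duration_s, load_fraction=0.8, multiplier=5):
    """Sustained load: a fraction of peak (80% by default) held for
    `multiplier` times the expected peak duration."""
    users = round(peak_users * load_fraction)
    return [users] * (peak_duration_s * multiplier)


def spike_profile(peak_users, ramp_s=5, hold_s=10):
    """Ramp to peak within a few seconds, hold briefly, then drop to zero."""
    ramp = [round(peak_users * (t + 1) / ramp_s) for t in range(ramp_s)]
    return ramp + [peak_users] * hold_s + [0]


def fatigue_profile(peak_users, cycles=3, rest_s=5, **spike_kwargs):
    """Repeat the spike cycle several times with only a short rest between."""
    profile = []
    for _ in range(cycles):
        profile += spike_profile(peak_users, **spike_kwargs)
        profile += [0] * rest_s
    return profile


soak = soak_profile(peak_users=1000, peak_duration_s=60)
spike = spike_profile(peak_users=1000)
fatigue = fatigue_profile(peak_users=1000)
print(len(soak), soak[0])   # seconds of soak, users held
print(spike[:5])            # the ramp-up steps
print(len(fatigue))         # total seconds across all cycles
```

Keeping the shapes as plain data makes it straightforward to review them with the team before feeding them into whatever load generator is in use.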

Monitoring key performance indicators

After performance tests are conducted, the results must be interpreted. The tester must identify the parameters that indicate the health of the system. Examples include CPU usage, memory usage, bandwidth consumption, round-trip time, overall response time, ping time and data fetch time. With many web applications and frameworks using AJAX and AJAX-like functionality, it is a good idea to identify the sections of the webpage that communicate with the server as a group, measure the performance of each section separately and, where possible, measure multiple sections clubbed together.

Tying the KPI values to the load patterns and tests gives important clues about architectural, design, semantic and structural weaknesses of the application, including algorithmic inefficiencies.
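As a rough illustration of tying KPIs to load, the sketch below groups measurements by load level and reports the first level at which the mean response time exceeds a limit. The sample data, the 400 ms limit and the function names are hypothetical and serve only to show the idea.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical samples: (concurrent_users, cpu_percent, response_time_ms)
samples = [
    (100, 35, 180), (100, 37, 190),
    (200, 55, 210), (200, 58, 230),
    (300, 82, 420), (300, 85, 460),
    (400, 97, 1500), (400, 98, 1700),
]


def summarize_by_load(samples):
    """Average CPU usage and response time for each load level."""
    grouped = defaultdict(list)
    for users, cpu, response_ms in samples:
        grouped[users].append((cpu, response_ms))
    return {
        users: {
            "cpu": mean([c for c, _ in rows]),
            "response_ms": mean([r for _, r in rows]),
        }
        for users, rows in sorted(grouped.items())
    }


def first_breach(summary, max_response_ms):
    """Lowest load level whose mean response time exceeds the limit."""
    for users, kpis in summary.items():
        if kpis["response_ms"] > max_response_ms:
            return users
    return None


summary = summarize_by_load(samples)
for users, kpis in summary.items():
    print(users, kpis["cpu"], kpis["response_ms"])
print("limit first exceeded at:", first_breach(summary, max_response_ms=400))
```

In this made-up data the response time stays nearly flat up to 200 users and then climbs sharply as CPU usage approaches saturation, which is the kind of pattern that points to where the investigation should start.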

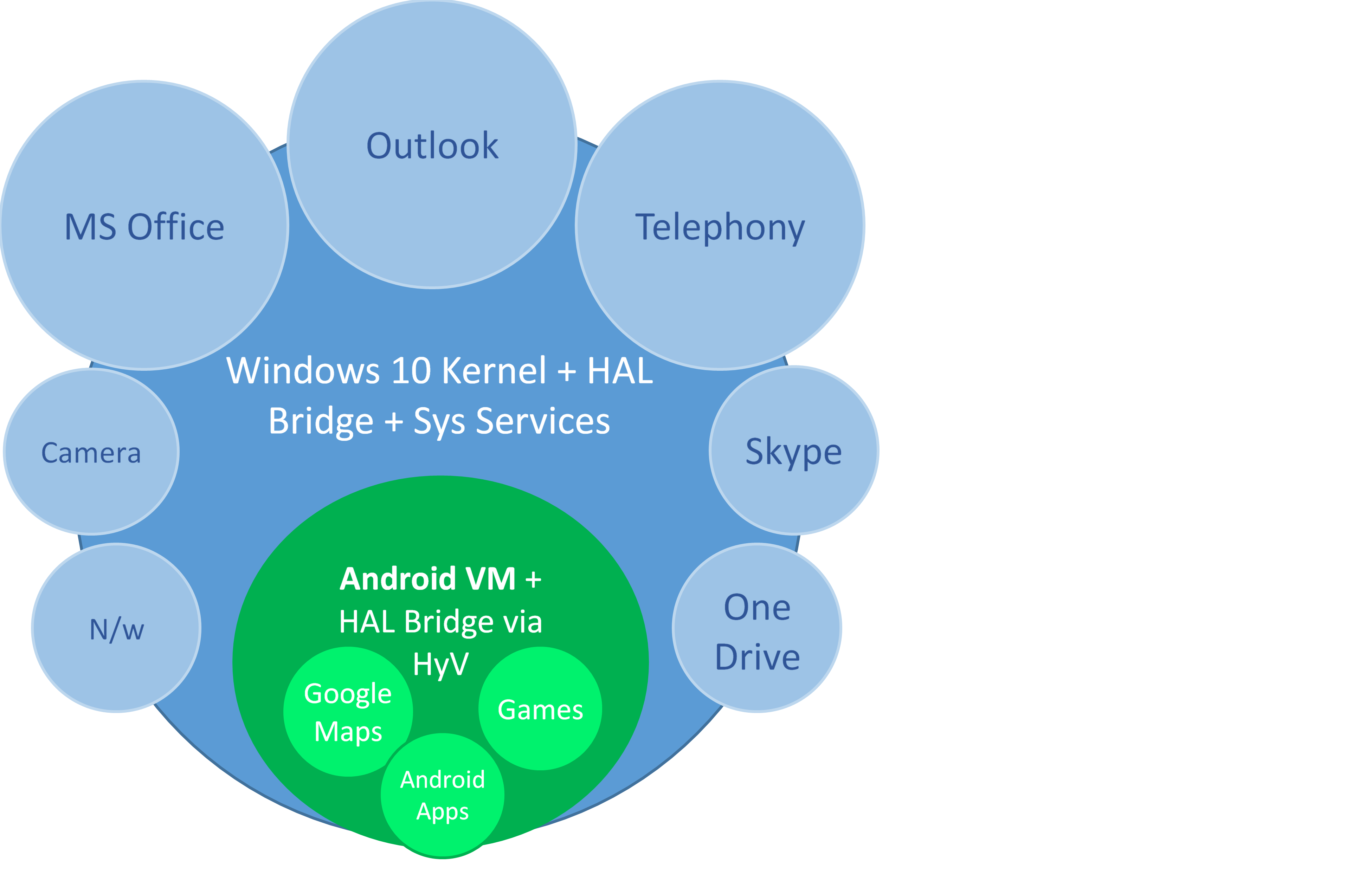

Technology impacts on performance

Testing may not be the best time to decide on underlying technologies such as frameworks and servers. However, measuring the impact of alternative technologies where possible gives important insights: leverage points and optimization opportunities for the development team, and talking points for the marketing and sales teams. Where possible, conduct the performance tests on competing platforms, hardware, operating systems and browsers. Study and share the results. Who knows, you may be hinting at a future change in the product you test!

In continuous deployment:

Automated Nightly Builds have been around for at least a decade now, and they have evolved! Some modern ANT environments allow you to include performance testing with checkpoints, data logs and all the usual trappings. The ANT result then includes the results of the performance testing as well.
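A simple way to fold performance results into a nightly run is a gate that compares the latest measurements with a stored baseline. The following is a minimal sketch, not tied to any particular build tool: the metric names, the 10% tolerance and the function `find_regressions` are assumptions, and every metric is assumed to be "lower is better".

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return the metrics whose current value is worse than the baseline
    by more than `tolerance` (all metrics assumed lower-is-better)."""
    regressions = {}
    for name, base_value in baseline.items():
        new_value = current.get(name)
        if new_value is None:
            continue  # metric was not measured in this run
        if new_value > base_value * (1 + tolerance):
            regressions[name] = (base_value, new_value)
    return regressions


# Hypothetical timings in milliseconds.
baseline = {"p90_response_ms": 300, "login_ms": 120, "search_ms": 450}
tonight = {"p90_response_ms": 310, "login_ms": 180, "search_ms": 440}

regressions = find_regressions(baseline, tonight)
for name, (old, new) in regressions.items():
    print(f"REGRESSION {name}: {old} -> {new}")

# A build script could pass this value to sys.exit() to fail the nightly run.
exit_code = 1 if regressions else 0
```

The tolerance absorbs normal run-to-run noise; how wide it should be depends on how stable the test environment is.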

Sluggishness:

Confirm that the system returns the desired responses within an acceptable time under different network conditions and across multiple devices.

Database:

Confirm that the database resolves deadlocks or system wait conditions quickly and with acceptable and predictable outcomes.

(Look for database connection pool issues, buffer sizes, DB server port setting issues, round-trip time between the app and DB servers, DB cache settings and related concerns.)